Does Microsoft OneDrive export large ZIP files that are corrupt?

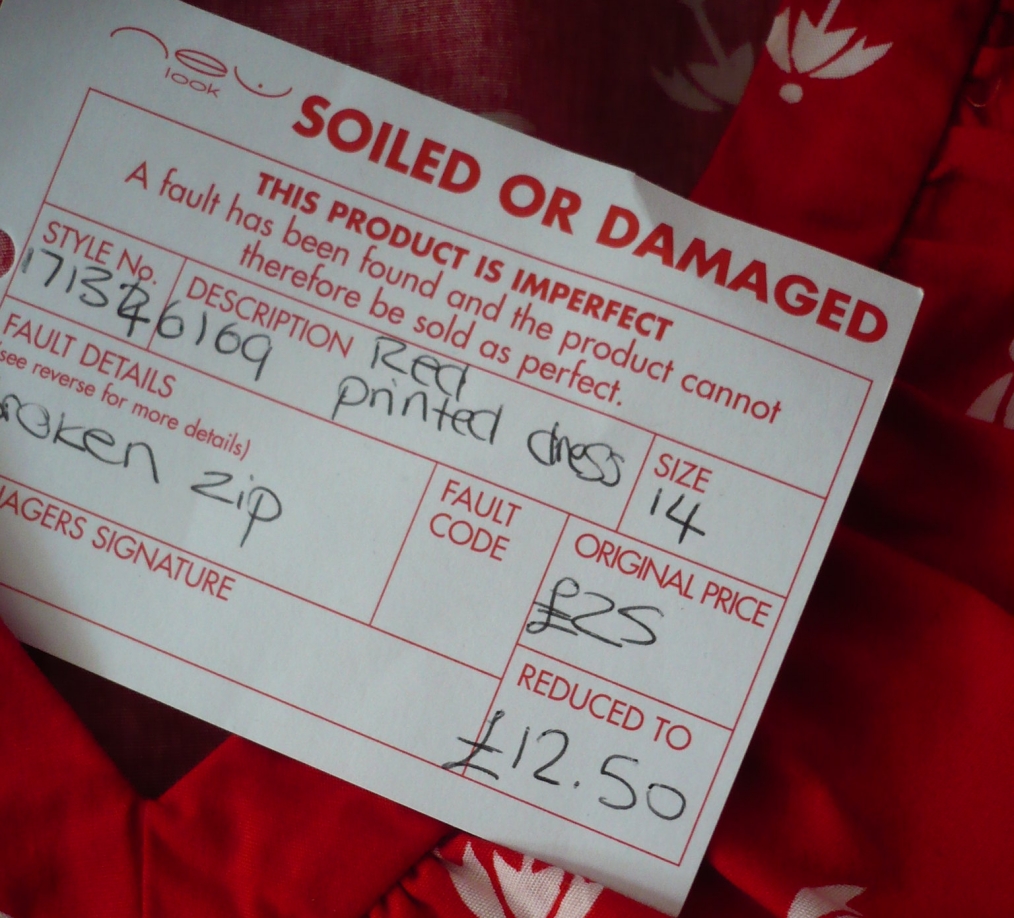

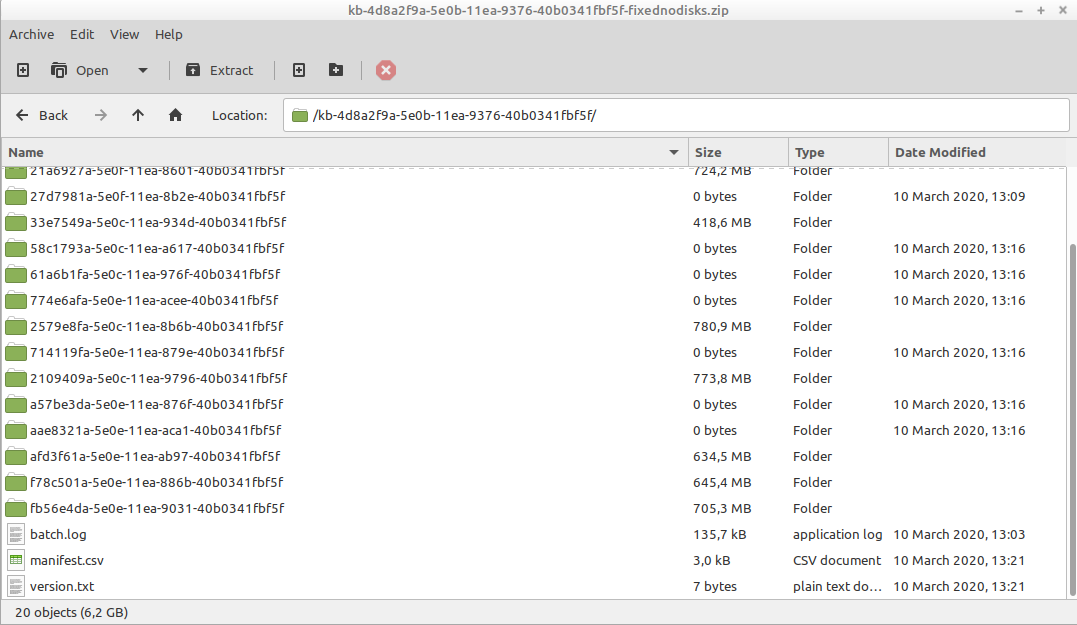

We recently started using Microsoft OneDrive at work. The other day a colleague used OneDrive to share a folder containing a large number of ISO images with me. Since I wanted to work with these files on my Linux machine at home, and no official OneDrive client for Linux exists at this point, I used OneDrive’s web client to download the contents of the folder. Doing so resulted in a 6 GB ZIP archive. When I tried to extract this ZIP file with my operating system’s (Linux Mint 19.3 MATE) archive manager, this resulted in an error dialog, saying that “An error occurred while loading the archive”:

The output from the underlying extraction tool (7-zip) reported a “Headers Error”, with an “Unconfirmed start of archive”. It also reported a warning that “There are data after the end of archive”. No actual data were extracted whatsoever. This all looked a bit worrying, so I decided to have a more in-depth look at this problem.

Extracting with Unzip

As a first test I tried to extract the file from the terminal with unzip (v. 6.0), using the following command:

unzip kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip

This resulted in the following output:

Archive: kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip

warning [kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip]: 1859568605 extra bytes at beginning or within zipfile

(attempting to process anyway)

error [kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip]: start of central directory not found;

zipfile corrupt.

(please check that you have transferred or created the zipfile in the

appropriate BINARY mode and that you have compiled UnZip properly)

So, according to unzip the file is simply corrupt. Unzip wasn’t able to extract any actual data.

Extracting with 7-zip

Next I tried to extract the file with 7-zip (v. 16.02) using this command:

7z x kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip

This resulted in the following (lengthy) output:

7-Zip [64] 16.02 : Copyright (c) 1999-2016 Igor Pavlov : 2016-05-21

p7zip Version 16.02 (locale=en_US.UTF-8,Utf16=on,HugeFiles=on,64 bits,4 CPUs Intel(R) Core(TM) i5-6500 CPU @ 3.20GHz (506E3),ASM,AES-NI)

Scanning the drive for archives:

1 file, 6154566547 bytes (5870 MiB)

Extracting archive: kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip

ERRORS:

Headers Error

Unconfirmed start of archive

WARNINGS:

There are data after the end of archive

--

Path = kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip

Type = zip

ERRORS:

Headers Error

Unconfirmed start of archive

WARNINGS:

There are data after the end of archive

Physical Size = 4330182775

Tail Size = 1824383772

ERROR: CRC Failed : kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f/afd3f61a-5e0e-11ea-ab97-40b0341fbf5f/08.wav

Sub items Errors: 1

Archives with Errors: 1

Warnings: 1

Open Errors: 1

Sub items Errors: 1

Here we see the familiar “Headers Error” and “Unconfirmed start of archive” errors, as well as an error about a cyclic redundancy check (CRC) that failed on an extracted file. Unlike unzip, 7-zip does succeed in extracting some of the data, but since the extracted folder is only 4.3 GB in size, the extraction is incomplete (the size of the ZIP file is 6 GB!).
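The sizes that 7-zip reports can be sanity-checked with a few lines of Python. This is only a sketch that uses the numbers copied from the output above; the variable names are my own. It checks whether the “Physical Size” (the part 7-zip treats as the archive) and the “Tail Size” (the part it treats as trailing data) add up to the size of the file, and what share of the file ends up in the tail.

```python
# Values copied from the 7-zip output above (all in bytes).
file_size = 6154566547
physical_size = 4330182775
tail_size = 1824383772

# 7-zip splits the file into an "archive" part and a "tail" part;
# together they should account for the whole file.
parts_add_up = physical_size + tail_size == file_size

# Share of the file that 7-zip did not treat as part of the archive.
tail_fraction = tail_size / file_size

print("Parts add up to file size:", parts_add_up)
print(f"Treated as trailing data: {tail_fraction:.1%}")
```

So 7-zip does see the whole file, but it stops interpreting it as a ZIP archive partway through. This by itself does not explain why.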

4 GiB size limit and ZIP64

At this point I started wondering if these issues could be related to the size of this particular ZIP file, especially since I have been able to process zipped OneDrive folders before without any problems. The Wikipedia entry on ZIP states that originally the format had a 4 GiB limit on the total size of the archive (as well as both the uncompressed and compressed size of a file). To overcome these limitations, a “ZIP64” extension was added to the format in version 4.5 of the ZIP specification (which was published in 2001). To be sure, I verified that both unzip and 7-zip on my machine support ZIP64 [1].
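To make the 4 GiB limit concrete, here is a minimal sketch of the arithmetic. It rests on a simplifying assumption: it only looks at one size value, whereas a real ZIP writer also has to consider the sizes and offsets of individual entries and the number of entries. The function name `needs_zip64` is made up for this illustration.

```python
# Classic ZIP stores sizes and offsets in unsigned 32-bit fields.
# The value 0xFFFFFFFF is used as a marker meaning "the real value is in
# the ZIP64 record", so it cannot be used as an actual size.
ZIP32_MARKER = 0xFFFFFFFF


def needs_zip64(size_in_bytes: int) -> bool:
    """Return True if a size cannot be stored in a 32-bit ZIP field."""
    return size_in_bytes >= ZIP32_MARKER


onedrive_zip_size = 6154566547   # file size as reported by 7-zip
small_zip_size = 3 * 1024**3     # hypothetical 3 GiB archive

print(needs_zip64(onedrive_zip_size))
print(needs_zip64(small_zip_size))
```

The 6 GB OneDrive file is clearly beyond what the 32-bit fields can hold, so it can only be valid if it uses ZIP64.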

Small OneDrive ZIP and home-rolled large ZIP

I did some additional tests to verify if my problem could be a ZIP64-related issue. First I downloaded a smaller (<4 GB) folder from OneDrive, and tried to extract the resulting ZIP file with unzip and 7-zip. Both were able to extract the file without any issues. Next I created two 8 GB ZIP files from data on my local machine with both the zip and 7-zip tools. I then tried to extract both files with both unzip and 7-zip (i.e. I extracted each file with both tools). Again, both extracted these files without any problems. Since these tests demonstrate that both unzip and 7-zip are able to handle both large ZIP files (which by definition use ZIP64) as well as smaller OneDrive ZIP files, this suggests that something odd is going on with OneDrive’s implementation of ZIP64.

Testing the ZIP file integrity

The zip tool has a switch that can be used to test the integrity of a ZIP file. I ran it on the problematic file like this:

zip -T kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip

Here’s the result:

Could not find:

kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.z01

Hit c (change path to where this split file is)

q (abort archive - quit)

or ENTER (try reading this split again):

So, apparently the zip utility thinks this is a multi-volume archive (which it isn’t). Running this command on any of my other test files (the small OneDrive file, and the large files created by zip and 7-zip) didn’t result in any errors.

Tests with Python’s zipfile module

The Python programming language by default includes a zipfile module, which has tools for reading and writing ZIP files. So, I wrote the following script, which opens the ZIP file in read mode, and then reads its contents (I used Python 3.6.9 for this):

import zipfile

# Open ZIP file

myZip = zipfile.ZipFile("kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip",

mode='r')

# Read all files in archive and check their CRCs and file headers.

myZip.testzip()

# Close the ZIP file

myZip.close()

Running the script raised the following error:

zipfile.BadZipFile: zipfiles that span multiple disks are not supported

This looks somewhat related to the outcome of zip’s integrity test, which reported a multi-volume archive. Looking at the source code of the zipfile module shows that this particular error is raised if a check on 2 data fields from the “zip64 end of central dir locator” fails:

if diskno != 0 or disks > 1:

    raise BadZipFile("zipfiles that span multiple disks are not supported")

Here’s the description of this data structure in the format specification:

- zip64 end of central dir locator signature: 4 bytes (0x07064b50)

- number of the disk with the start of the zip64 end of central directory: 4 bytes

- relative offset of the zip64 end of central directory record: 8 bytes

- total number of disks: 4 bytes

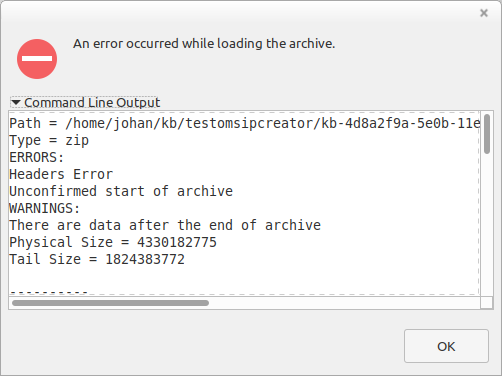

Python’s zipfile raises the error if either the value of the “number of the disk with the start of the zip64 end of central directory” field (variable diskno) isn’t equal to 0, or the “total number of disks” (variable disks) is larger than 1. So, I opened the file in a Hex editor, and zoomed in on the “zip64 end of central dir locator”:

Here, the highlighted bytes (0x504b0607) make up the signature of the “zip64 end of central dir locator” [2]. The 4 bytes inside the blue rectangle contain the “number of the disk” value. Here, its value is 0, which is the correct and expected value. The 4 bytes inside the red rectangle contain the “total number of disks” value, which is also 0. But this is really odd, since neither value should trigger the “zipfiles that span multiple disks are not supported” error! Also, a check on the 8 GB ZIP files that I had created myself with zip and 7-zip showed both to have a value of 1 for this field. So what’s going on here?
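The same inspection can be done without a hex editor. Below is a minimal sketch that reads the end of the file, searches backwards for the locator signature and unpacks the three fields with Python’s struct module. The function name `read_zip64_locator` and the `tail_bytes` default are my own choices, and the plain signature search is an assumption that could in principle hit a false positive inside compressed data; a robust reader would first locate the regular end of central directory record.

```python
import struct

# The signature value 0x07064b50, stored little-endian, appears in the file
# as the bytes 50 4b 06 07 (which is "PK" followed by 0x06 0x07).
LOCATOR_SIG = struct.pack("<I", 0x07064b50)

# signature (4 bytes), disk number (4), offset (8), total disks (4)
LOCATOR_FMT = "<4sIQI"
LOCATOR_LEN = struct.calcsize(LOCATOR_FMT)  # 20 bytes


def read_zip64_locator(path, tail_bytes=70_000):
    """Return (disk_number, zip64_eocd_offset, total_disks), or None."""
    with open(path, "rb") as f:
        f.seek(0, 2)                        # jump to the end of the file
        size = f.tell()
        f.seek(max(0, size - tail_bytes))   # the locator sits near the end
        tail = f.read()
    pos = tail.rfind(LOCATOR_SIG)           # reverse search for the signature
    if pos < 0 or pos + LOCATOR_LEN > len(tail):
        return None                         # no (complete) locator found
    _, diskno, offset, disks = struct.unpack_from(LOCATOR_FMT, tail, pos)
    return diskno, offset, disks
```

Calling `read_zip64_locator("kb-4d8a2f9a-5e0b-11ea-9376-40b0341fbf5f.zip")` should, going by what the hex editor shows, return 0 for both the disk number and the total number of disks.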

Digging into zipfile’s history

The most likely explanation I could think of was some difference between my local version of the Python zipfile module and the latest published version on GitHub. Using GitHub’s blame view, I inspected the revision history of the part of the check that raises the error. This revealed a recent change to zipfile: prior to a patch that was submitted in May 2019, the offending check was done slightly differently:

if diskno != 0 or disks != 1:

    raise BadZipFile("zipfiles that span multiple disks are not supported")

Note that in the old situation the test would fail if disks was any value other than 1, whereas in the new situation it only fails if disks is greater than 1. Given that for our OneDrive file the value is 0, this explains why the old version results in the error. The Git commit of the patch also includes the following note:

Added support for ZIP files with disks set to 0. Such files are commonly created by builtin tools on Windows when use ZIP64 extension.
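The difference between the two checks is easy to see when they are put side by side. The sketch below wraps each condition in a small function (the function names are mine, the conditions are the ones quoted above) and evaluates them for a few values of disks, with diskno fixed at 0.

```python
def old_check_rejects(diskno: int, disks: int) -> bool:
    """Condition used before the May 2019 patch."""
    return diskno != 0 or disks != 1


def new_check_rejects(diskno: int, disks: int) -> bool:
    """Condition used after the patch."""
    return diskno != 0 or disks > 1


# Compare both conditions for disks = 0 (OneDrive), 1 (zip, 7-zip) and 2.
for disks in (0, 1, 2):
    print(disks, old_check_rejects(0, disks), new_check_rejects(0, disks))
```

For disks equal to 0 the old condition is true (the file is rejected) and the new one is false (the file is accepted). For 1 both accept the file, and for 2 both reject it.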

So could this be the vital clue we need to solve this little file format mystery? Re-running my Python test script with the latest version of the zipfile module did not result in any reported errors, so this looked hopeful for a start. But is the 0 value of “total number of disks” also the thing that makes unzip and 7-zip choke?

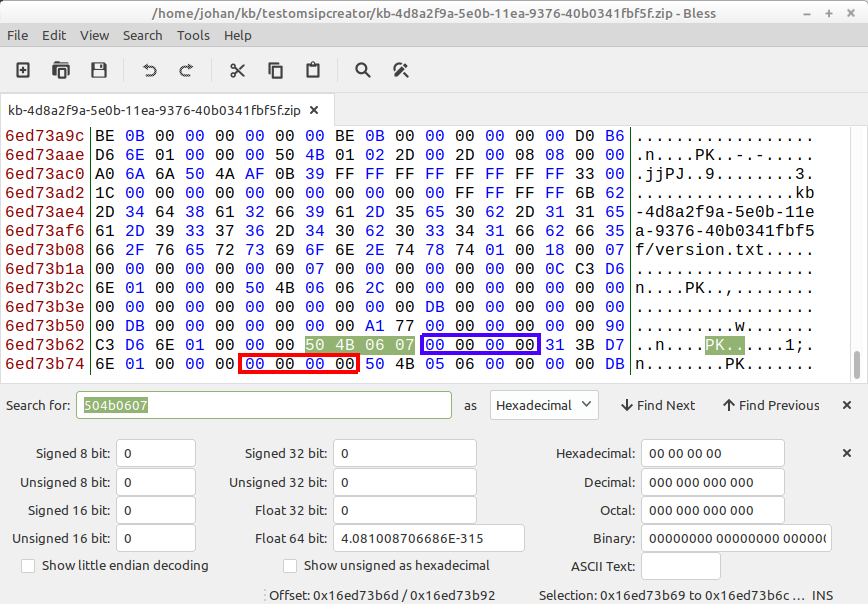

Hacking into the OneDrive ZIP file

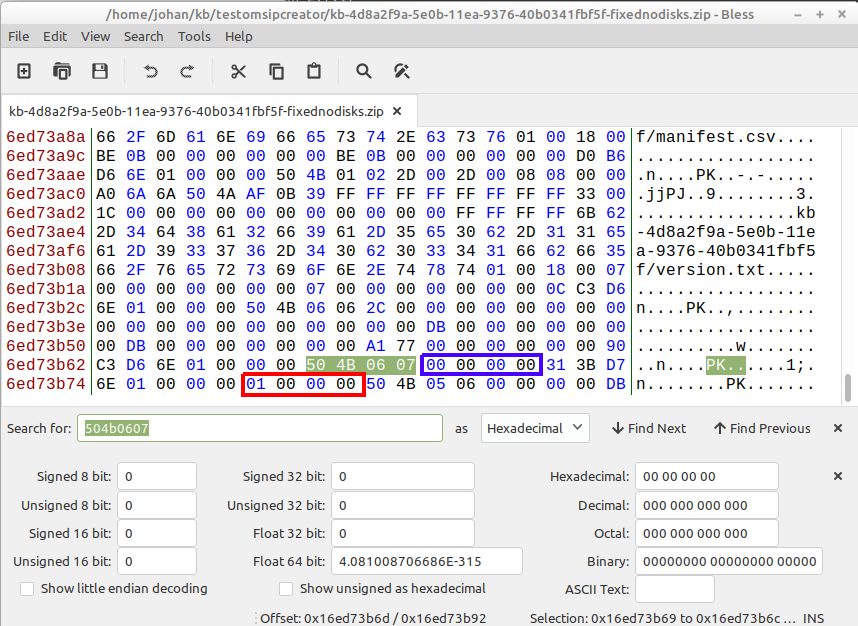

To put this to the test, I first made a copy of the OneDrive ZIP file. I opened this file in a Hex editor, and did a search on the hexadecimal string 0x504b0607, which is the signature that indicates the start of the “zip64 end of central dir locator” [3]. I then changed the first byte of the “total number of disks” value (this is the 13th byte after the signature, indicated by the red rectangle in the screenshot) from 0x00 to 0x01:

This effectively sets the “total number of disks” value to 1 (unsigned little-endian 32-bit value). After saving the file, I repeated all my previous tests with unzip, 7-zip, as well as zip’s integrity check. The modified ZIP file passed all these tests without any problems! The contents of the file could be extracted normally, and the extraction is also complete. The file can also be opened normally in Linux Mint’s archive manager, as this screenshot shows:

So, it turns out that the cause of the problem is the value of one field in the “zip64 end of central dir locator”, which can be provisionally fixed by nothing more than changing one single bit!
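For anyone who prefers not to edit a 6 GB file by hand, the same change can be scripted. The following is a rough sketch and not a tested repair tool: the function name `patch_total_disks` and its parameters are hypothetical, it relies on a plain backwards search for the signature, and it only writes to a copy so the original download stays untouched.

```python
import shutil
import struct

LOCATOR_SIG = b"PK\x06\x07"   # bytes 50 4b 06 07
LOCATOR_LEN = 20              # signature + disk number + offset + total disks
TOTAL_DISKS_OFFSET = 16       # 4 (signature) + 4 (disk number) + 8 (offset)


def patch_total_disks(src, dst, tail_bytes=70_000):
    """Copy src to dst and change a zero 'total number of disks' to 1.

    Returns True if dst was patched, False if nothing was changed.
    """
    shutil.copyfile(src, dst)               # work on a copy only
    with open(dst, "r+b") as f:
        f.seek(0, 2)
        size = f.tell()
        start = max(0, size - tail_bytes)
        f.seek(start)
        tail = f.read()
        pos = tail.rfind(LOCATOR_SIG)       # reverse search near end of file
        if pos < 0 or pos + LOCATOR_LEN > len(tail):
            return False                    # no locator, so nothing to patch
        field_pos = start + pos + TOTAL_DISKS_OFFSET
        f.seek(field_pos)
        (disks,) = struct.unpack("<I", f.read(4))
        if disks != 0:
            return False                    # field is already non-zero
        f.seek(field_pos)
        f.write(struct.pack("<I", 1))       # little-endian 1: 01 00 00 00
        return True
```

A call such as `patch_total_disks("onedrive.zip", "onedrive-fixed.zip")` (both file names are placeholders) would leave the download as it is and write the modified copy next to it. Note that copying a multi-gigabyte file takes time and disk space.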

Other reports on this problem

If a widely-used platform by a major vendor such as Microsoft produces ZIP archives with major interoperability issues, I would expect others to have run into this before. This question on Ask Ubuntu appears to describe the same problem, and here’s another report on corrupted large ZIP files on a OneDrive-related forum. On Twitter Tyler Thorsted confirmed my results for a 5 GB ZIP file downloaded from OneDrive, adding that the Mac OS X archive utility didn’t like the file either. Still, I’m surprised I couldn’t find much else on this issue.

Similarity to Apple Archive utility problem

The problem looks superficially similar to an older issue with Apple’s Archive utility, which would write corrupt ZIP archives for cases where the ZIP64 extension is needed. From the Wikipedia entry on ZIP:

Mac OS Sierra’s Archive Utility notably does not support ZIP64, and can create corrupt archives when ZIP64 would be required.

More details about this are available here, here and here. Interestingly a Twitter thread by Kieran O’Leary put me on track of this issue (which I hadn’t heard of before). It’s not clear to me if the OneDrive problem is identical or even related, but because of the similarities I thought it was at least worth a mention here.

Conclusion

The tests presented here demonstrate how large ZIP files exported from the Microsoft OneDrive web client cannot be read by widely-used tools such as unzip and 7-zip. The problem only occurs for large (> 4 GiB) files that use the ZIP64 extension. The cause of this interoperability problem is the value of the “total number of disks” field in the “zip64 end of central dir locator”. In the OneDrive files, this value is set to 0 (zero), whereas most reader tools expect a value of 1. It is debatable whether the OneDrive files violate the ZIP format specification, since the spec doesn’t say anything about the permitted values of this field. Affected files can be provisionally “fixed” by changing the first byte of the “total number of disks” field in a hex editor. However, to ensure that existing files that are affected by this issue remain accessible in the long term, we need a more structural and sustainable solution. It is probably fairly trivial to modify existing ZIP reader tools and libraries such as unzip and 7-zip to deal with these files. I’ll try to get in touch with the developers of some of these tools about this issue. Ideally things should also be fixed on Microsoft’s end. If any readers have contacts there, please bring this post to their attention!

Test file

I’ve created an openly-licensed test file that demonstrates the problem. It is available here:

https://zenodo.org/record/3715394

Update (17 March 2020)

For unzip I found this ticket on the Info-Zip issue tracker, which looks identical to the problem discussed in this post. The ticket was already created in 2013, but its current status is not entirely clear.

For 7-zip, things are slightly complicated by the fact that for Unix a separate p7zip port exists, which currently is 3 major releases behind the main 7-zip project. In any case, I’ve just opened this feature request in the p7zip issue tracker.

Meanwhile Andy Jackson has been trying to get this issue to the attention of Microsoft, so let’s see what happens from here.

Fix-OneDrive-Zip script (update 8 June 2020)

In the comments section, Paul Marquess posted a link to a small Perl script he wrote that automatically updates the “total number of disks” field of a problematic OneDrive ZIP file. The script is available here:

https://github.com/pmqs/Fix-OneDrive-Zip

I ran a quick test with my openly-licensed test file, using the following command:

fix-onedrive-zip onedrive-zip-test-zeros.zip

After running the script, the file was indeed perfectly readable. Thanks Paul!

Revision history

- 14 March 2020: added analysis with Python zipfile, and updated conclusions accordingly.

- 17 March 2020: added update with links to Info-Zip and p7zip issue trackers.

- 18 March 2020: added link to test file.

- 8 June 2020: added reference to Fix-OneDrive-Zip script by Paul Marquess.

1. For unzip you can check this by running it with the --version switch. If the output includes ZIP64_SUPPORT, this means ZIP64 is supported.

2. Note that this is the big-endian representation of the signature, whereas the ZIP format specification uses little-endian representations. See more on endianness here.

3. Since the “zip64 end of central dir locator” is located near the end of the file, the quickest way to find it is to scroll to the very end of the file in the Hex editor, and then do a reverse search (“Find Previous”) from there.


Comments

The corrupt zip files created on macOS by Finder.app, ditto, Archive Utility etc. can in fact be opened by 7-zip v.17.01 beta onwards. Unfortunately only older versions are ported to platforms other than Windows. Maybe the newer versions of 7-zip for Windows are also able to extract the corrupted zip files created by OneDrive?

I do not know of any other utility outside of Apple’s own tools (Finder, ditto, Archive Utility), except recent versions of 7-zip for Windows, that can extract these kinds of files. Does anyone know of another tool, preferably with a FLOSS license and natively running on Linux?

I also tried (and failed) to convince the info-zip developers to add support:

@matmat Thanks, this is some really useful information. Don’t have access to any Windows machine right now (and because of the current COVID-19 outbreak I won’t for at least the coming 2 weeks), but I’ll give this a try later. (Side note: your comment made me realize the formatting of the comments section of my blog needs some work, will look at this later!)

If preserving file permissions isn’t an issue when unzipping, you could try using jar. It can work in streaming mode and read a zip file from stdin. That will bypass the problem with the incorrect value in the central directory (because it doesn’t read it). Usage is

jar xvf /dev/stdin <file.zip

There are other programs that can unzip in streaming mode.

@pmqs Interesting!

Unfortunately it errors out with this for me testing on a 33G file created with macOS Finder.app:

Is there a way to increase the buffer? What other programs can unzip in streaming mode?

@matmat That suggests to me that jar may support streaming for input, but output needs buffering. Not sure why it needs to do that (I have a streaming unzip in development and it doesn’t need to buffer data before outputting). I don’t use jar, so can’t really comment. No idea how to increase the buffer size.

I had a link that referenced some other streaming unzippers, but can’t find it. Try searching for the terms “stream unzip”.

The only one I can remember offhand is https://github.com/madler/sunzip

@pmqs Thanks!

Tried sunzip on a Finder.app-created zip file of the same (~33G) size, which gives an error similar to what happens when you try to unzip these files with a non-stream unzip program (info-zip etc.):

To this day the only tool I have found working for these files outside of Apple’s own is 7-zip for Windows :(

@matmat Are there any examples of the zip file available online anywhere. I can try my code against it & see what I get.

@pmqs I’d come across the suggestion to use jar for this before, but when I try it gives me the following error:

Strangely the very same error also happens for smaller OneDrive files (that don’t use ZIP64), but it does work for ZIP files that I create myself. I suspect this error is unrelated to ZIP64, but related to the fact that the items in the OneDrive ZIPs aren’t flate-compressed.

@pmqs I am uploading a zip file to you for testing! Please do not distribute. Given some time I could probably produce a file that can be freely shared. Let me know if you need anything else.

This file extracts correctly with Apple tools and also with latest 7-zip on Windows.

@matmat Getting back to your first comment: I can now confirm that the 20.0 alpha version of 7-zip for Windows is able to extract the OneDrive files. See also this ticket I opened for the p7zip port. Hopefully these changes will be ported over to p7zip. I also created an openly-licensed test file, available here (added the link to the blog post as well):

https://zenodo.org/record/3715394

@bitsgalore Hopefully! But the latest port version was for version 16.02 released almost four years ago: https://sourceforge.net/projects/p7zip/files/p7zip/ so I would not hold my breath.

Thanks - give me a shout when that’s available & where it is uploaded to.

@matmat I downloaded the enormous zip file you sent me. No luck though. I’m afraid my code is too immature to deal with it.

This archive uses none of the Zip64 extension that must be used when the file is > 4Gig.

At the end of the file the End of central directory record is present as expected, but there is no Zip64 end of central directory locator record at all. Similarly the extra Zip64 fields that should be present in the central directory headers are not present.

This is just a 32-bit zip file that has had 64-bit data shoe-horned into it. All the 32-bit fields have just overflowed.

A seriously badly-formed zip file.

Johan, fifteen minutes ago I tried to unzip in Linux Mint a 4.2GiB file from OneDrive, and ran into the same problem. I read your post, changed one byte (actually one bit): problem solved! Thanks! Gertjan

@snegel Thanks for reporting back, good to hear this is working for others as well!

Thanks a lot, your trick solved the problem for me as well! 💯

I created a small script to do the one byte update of these OneDrive zip files. See Fix-OneDrive-Zip for more details.

We recently started using Microsoft OneDrive at work (by using Web Interface) and I have to upload 20-30Gb of big zipped files (2-5Gb each one) every day. I already tried to use a lot of tools (WinZIP, 7-ZIP, WinRAR, and JZip), and I always had this kind of issue (corrupted files)

I think the problem is the OneDrive service, cause when I upload these files to other servers (such as GDrive) I never have this kind of issue.

@pmqs Thanks for posting the link to your script, this is really useful! I just added another update to my post in which I refer to it.

@brunoicq Apart from the bad ZIP file problem OneDrive has various other issues that make it a complete pain to use. As an example, see the below Twitter thread on my attempt at uploading some 6 GB of data through the web interface:

https://twitter.com/bitsgalore/status/1240006157755977729

Apart from that the OneDrive web client also resets the time stamps (creation and last-modified date and time) on any uploaded files. From what I can gather both issues only occur when you use the web interface (the native Windows client seems to work pretty well).

Hi there,

I’ve been trying to figure this issue out for months now - it’s beyond frustrating (same thing happens to me, when I download large files/folders from OneDrive or SharePoint, the zip is corrupt). However, I have no coding background and am just a regular Mac/PC end user that’s just been frustrated and searching on Google how to fix this and came across this thread.

With the script fix, how can I run this on my Mac? Step by step would be much appreciated.

Thank you so much!

This was super helpful information. Thank you. But when I tried to run the fixer on a 20GB archive, I get

Error: Cannot find Zip signature at end of ‘OneDrive_1_8-19-2020.zip’

It’s an archive consisting of 25 video files, each about 1.5GB. It was generated by OneDrive when I selected all the files for download at once. Downloading them one at a time is going to be a pain but I might as well get started.

Can you run zipdetails against the OneDrive file and post the results or add to a gist?

Also, run perl -V (note that is uppercase V) and post the output.

I tried zipdetails but after 12 hours I cancelled it. I’m going to try it again overnight. Full output from perl -V:

Summary of my perl5 (revision 5 version 18 subversion 4) configuration:

Platform: osname=darwin, osvers=19.0, archname=darwin-thread-multi-2level uname=’darwin osx397.sd.apple.com 19.0 darwin kernel version 18.0.0: tue jul 9 11:12:08 pdt 2019; root:xnu-4903.201.2.100.7~1release_x86_64 x86_64 ‘ config_args=’-ds -e -Dprefix=/usr -Dccflags=-g -pipe -Dldflags= -Dman3ext=3pm -Duseithreads -Duseshrplib -Dinc_version_list=none -Dcc=cc’ hint=recommended, useposix=true, d_sigaction=define useithreads=define, usemultiplicity=define useperlio=define, d_sfio=undef, uselargefiles=define, usesocks=undef use64bitint=define, use64bitall=define, uselongdouble=undef usemymalloc=n, bincompat5005=undef Compiler: cc=’cc’, ccflags =’ -g -pipe -fno-common -DPERL_DARWIN -fno-strict-aliasing -fstack-protector’, optimize=’-Os’, cppflags=’-g -pipe -fno-common -DPERL_DARWIN -fno-strict-aliasing -fstack-protector’ ccversion=’’, gccversion=’4.2.1 Compatible Apple LLVM 11.0.3 (clang-1103.0.29.20) (-macos10.15-objc-selector-opts)’, gccosandvers=’’ intsize=4, longsize=8, ptrsize=8, doublesize=8, byteorder=12345678 d_longlong=define, longlongsize=8, d_longdbl=define, longdblsize=16 ivtype=’long’, ivsize=8, nvtype=’double’, nvsize=8, Off_t=’off_t’, lseeksize=8 alignbytes=8, prototype=define Linker and Libraries: ld=’cc’, ldflags =’ -fstack-protector’ libpth=/usr/lib /usr/local/lib libs= perllibs= libc=, so=dylib, useshrplib=true, libperl=libperl.dylib gnulibc_version=’’ Dynamic Linking: dlsrc=dl_dlopen.xs, dlext=bundle, d_dlsymun=undef, ccdlflags=’ ‘ cccdlflags=’ ‘, lddlflags=’ -bundle -undefined dynamic_lookup -fstack-protector’

Characteristics of this binary (from libperl): Compile-time options: HAS_TIMES MULTIPLICITY PERLIO_LAYERS PERL_DONT_CREATE_GVSV PERL_HASH_FUNC_ONE_AT_A_TIME_HARD PERL_IMPLICIT_CONTEXT PERL_MALLOC_WRAP PERL_PRESERVE_IVUV PERL_SAWAMPERSAND USE_64_BIT_ALL USE_64_BIT_INT USE_ITHREADS USE_LARGE_FILES USE_LOCALE USE_LOCALE_COLLATE USE_LOCALE_CTYPE USE_LOCALE_NUMERIC USE_PERLIO USE_PERL_ATOF USE_REENTRANT_API Locally applied patches: /Library/Perl/Updates/ comes before system perl directories

installprivlib and installarchlib points to the Updates directory

Built under darwin

Compiled at Jun 5 2020 17:34:07

@INC:

/Library/Perl/5.18/darwin-thread-multi-2level

/Library/Perl/5.18

/Network/Library/Perl/5.18/darwin-thread-multi-2level

/Network/Library/Perl/5.18

/Library/Perl/Updates/5.18.4

/System/Library/Perl/5.18/darwin-thread-multi-2level

/System/Library/Perl/5.18

/System/Library/Perl/Extras/5.18/darwin-thread-multi-2level

/System/Library/Perl/Extras/5.18

It should run in seconds. If it is taking that long to run I suspect it is attempting to find the end of the Zip Central Directory record by scanning backwards from the end of the file. After 12 hours it really should have found it. That suggests it isn’t there.

Try running with the --scan option – that will get the program to work from the start of the file:

zipdetails -v --scan 'OneDrive_1_8-19-2020.zip'

You should start to see output immediately. Given the size of the file it may take a while to run. The part we need to see is the END CENTRAL HEADER at the end - it looks like this

That looks fine.

just fixed a 50GB zip from one drive with this in seconds. Thank-you SO much. What a lifesaver.

Nice analysis Johan. I found this page only after having gone through the pain of diagnosing this problem for myself, in our case when we created huge zip files using windows “send to-> compressed (zipped) folder”. No joy from microsoft support. If anyone for any reason wants a Compiled/C solution, the utility for fixing MS zip files I wrote before finding pmqs’s script is at https://github.com/stripydog/fixmszip.git Should work on big and little endian systems (although I haven’t actually tested on big endian yet…)

I am a total new guy to this so forgive the question. But how do I install the code to fix extraction errors?

Thank you very much indeed for the thorough analysis, and excellent documentation. I was running into the same issue with +4GiB downloads from OneDrive: I was using 7-zip version 16.x (64-bit), and while it worked fine for all OD downloads smaller than 4GiB, it balked at any that were larger along the lines of the problem description in your article. I have just installed 7-zip version 19.00 (also 64-bit), and that works just fine on exactly the same large OD downloads. I have also tried the “unzip” (x64) version that is currently available from the OpenSUSE Linux 15.2 update service, which equally works just fine with these larger files. So touching up the bits to make it pass may no longer be required.

Take a look at the comment that I just added - you may no longer need this work-around.

Just found from my own experience that there is this 4GB limit, had started downloading individual files, and saw this.

Thank you for the explanation @bitsgalore

@hellmi-pelmi unfortunately the latest version of 7z isn’t available for Linux. Will have to go with this work-around

@pmqs The script unfortunately didn’t resolve the zip file issue.

I have a zip file of 20GB.

fix-onedrive-zip executed properly on the file, and now when I try to rerun I get:

However, the zip file is still marked as corrupt.

I’m wondering if the total number of disks field could take values greater than 1 too. I mean, if the file size is 2 * 4GB, the total number of disks field would be expected to be 1; if 3 * 4GB, then 2; something like that.

The reason I suspected this is because I tried the script on a smaller zip file of 4.1 GB and that worked fine

That output suggests there is more wrong with your zip file than just the Total Number of Disks issue. Looks like more serious corruption. That could mean the file has got corrupted while downloading, or it has the corruption cooked into the original file. Can you share any details on how the file was created?

Also, is RNA.zip available to download?

Suspect it won’t make any difference, but have you tried testing the zip file using unzip -t RNA.zip?

That is an old version of zipdetails you are running. Can you try with the latest version that is available here. If you still have a copy of RNA.zip before you ran fix-onedrive-zip can you run zipdetails with it.

If you get the error Cannot find 'Zip64 end of central directory record': 0x06054b50 can you run

zipdetails --scan -v RNA.zip

That may take a very long time to run, so you need to be patient.

No - that field has nothing to do with the overall size of the zip file. It is a legacy feature that dates back to a time when you had to split zip files across multiple floppy disks.

Thank you for your response @pmqs

The original files are not corrupt. The directory contained 121 fastq.gz files and 1 md5sum text file. Since I was left with no other choice, I downloaded each of the files individually, verified it with the md5sum file and it matched.

I had tried downloading different combinations of subsets of these 121 files - those less than total zip file size of 4gb unzipped properly - others in range of 4gb to 8gb unzipped properly after running your script. So the original files are not corrupt.

Sadly, I’m not allowed to share the RNA.zip file publicly.

output of unzip added below

I will try with latest zipdetails

Thanks so much for posting this. I was tearing my hair out trying to figure out why I couldn’t open a batch of 9 GB files I’d downloaded from a colleague’s OneDrive. My Mac refused to open them (“Error 79- inappropriate file type or format”) until I tried the Fix-OneDrive-Zip solution from @pmqs, then voila, they worked!

That Perl script is a life saver! Thanks!

Incredibly this is still a problem in September 2022. Thanks a lot @pmqs and agree, that Perl script is awesome!